Is Your Data Decision-Ready?

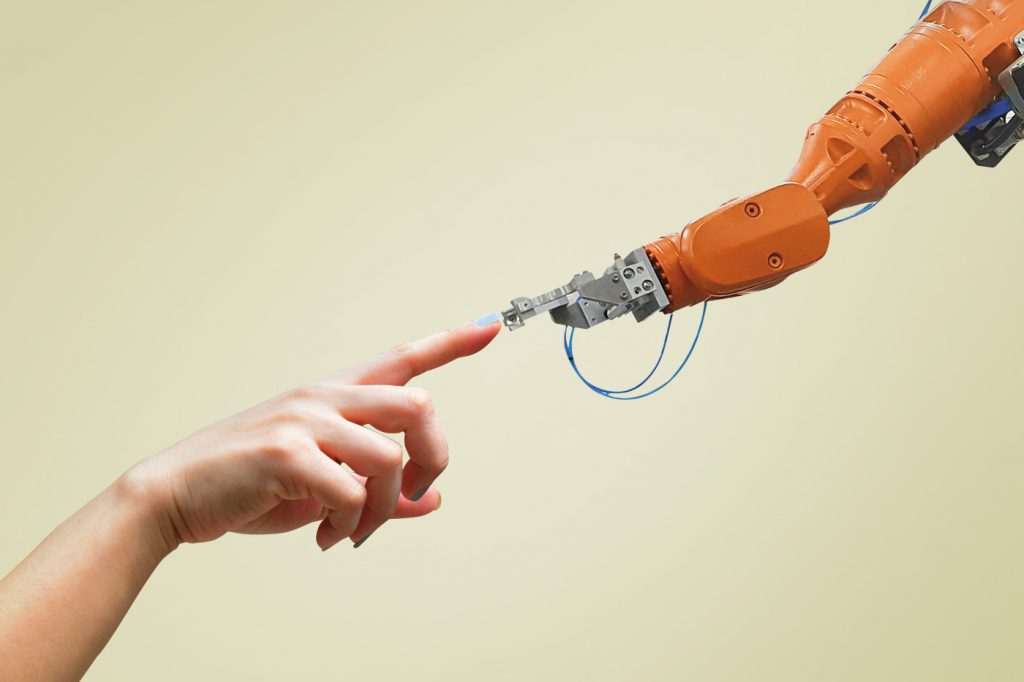

Pure reliance on tech isn’t enough. It’s time to blend the best of humans and machines for optimal outcomes.

By Julia Chong

Anyone can make a decision, but the best managers and executives know that there is more than meets the eye when it comes to making the right decision.

With so much focus on tech and digital, it’s easy to get carried away with the feeling that data is the be-all and end-all, but data should not be mistaken for knowledge. The most effective managers and decision-makers still possess that rare, sparkling quality of piercing through the ‘white noise’ of data to derive true insights – a blended, middle-path approach that straddles both worlds, combining the emotional quotient of human intuition with the hyperscale of tech.

This blended approach, touted as one of the future trends in data and finance, is designed to glean the most from data analytics. It isn’t enough to just have data and dashboards; what’s needed is analytics grounded in business reality, which is what makes data decision-ready. Clement Christensen, Senior Principal Advisor at global IT research firm Gartner, states in a blog post: “Business leaders largely agree that data from finance are often out-of-date, inconsistent, or incomplete. Finance must think more broadly about making data decision-ready.”

The IT firm posits that decision-ready data will be the next business imperative for financial institutions seeking to gain or retain their competitive advantage in the coming decade: “Progressive organisations are already complementing the best of human decision-making capabilities with the power of data and analytics and artificial intelligence (AI) – to create opportunities to fundamentally change what they do. The quality of the decisions being made by these data-driven organisations is giving them a competitive edge, especially on digital initiatives.

“Decisions today must instead be connected, contextual, and continuous — not through some academic exercise in decision theory, but by creating a truly symbiotic relationship between humans and machines to generate the optimal action.”

In Peter Drucker’s classic 1967 Harvard Business Review article, The Effective Decision, the management guru wrote:

“Effective executives do not make a great many decisions. They concentrate on what is important. They try to make the few important decisions on the highest level of conceptual understanding. They try to find the constants in a situation, to think through what is strategic and generic rather than to ‘solve problems’. They are, therefore, not overly impressed by speed in decision-making; rather, they consider virtuosity in manipulating a great many variables a symptom of sloppy thinking. They want to know what the decision is all about and what the underlying realities are which it has to satisfy. They want impact rather than technique. And they want to be sound rather than clever.

“Decision-makers need organised information for feedback. They need reports and figures. But unless they build their feedback around direct exposure to reality — unless they discipline themselves to go out and look — they condemn themselves to a sterile dogmatism.”

One promising, emerging source of data speed and accuracy is the burgeoning field of quantum computing, currently deployed at some of the world’s major banks in collaboration with technology firms. It is meant to overcome the shortcomings (and hype) of AI and machine learning; embedded bias and a lack of diversity in problem solving are just some of the drawbacks that come to mind. Our feature, Quantum Computing: Finance’s Next Frontier, on page 26 is a must-read.

These rapid-fire digital tools, which put data at our fingertips, have grown exponentially in both number and complexity. Nonetheless, stripped to the bare basics, the fundamentals of what constitutes an effective decision haven’t changed that much. All that is required of managers and executives is to update their references and gain a few extra tools to incorporate new tech and data points into their everyday decision-making.

Gartner’s 5 Steps to Integrate Human and Machine Insights is one such handy tool.

A note of advice, specifically for leaders in finance who have traditionally been trained to adhere to data accuracy at all costs: be prepared to forgo some level of data accuracy in this next wave of future-proofing.

According to the research firm’s 2019 Data Management Model Survey, 73% of finance functions favour a centralised, tightly governed source for data over decentralised structures for information management, which is unsurprising. Whilst a centralised source is highly desirable for meeting a governance mandate, it may not be the best way to achieve business goals in the new future of banking, where decisions must be made as data rolls in and the goalposts look more like a moving target.

This isn’t to say that executives should forgo all standards of accuracy; rather, as long as data fidelity is maintained, an acceptable level of accuracy (below 100%) is sufficient to make an effective decision.

The benefit of this ‘sufficient versions of the truth’ strategy can be significant. The survey reports that organisations pursuing this strategy – as opposed to the ‘single source of truth’ approach – are twice as likely to improve the quality of decision-making and business outcomes. This may be incentive enough to get executives to rethink their approach and steer clear of Drucker’s “sterile dogmatism” to successfully future-proof banks.

Julia Chong is a researcher and writer with Akasaa, a boutique content development firm with a presence in Malaysia, Singapore, and the UK.