Careful, Your Bias is Showing

If you’re into AI, this one’s for you.

By Julia Chong

In Luxembourg, it wasn’t until 1972 that a woman could open a bank account or apply for a loan in her own name without her husband’s signature. As a result, women are likely to be under-represented in historical Luxembourg banking datasets.

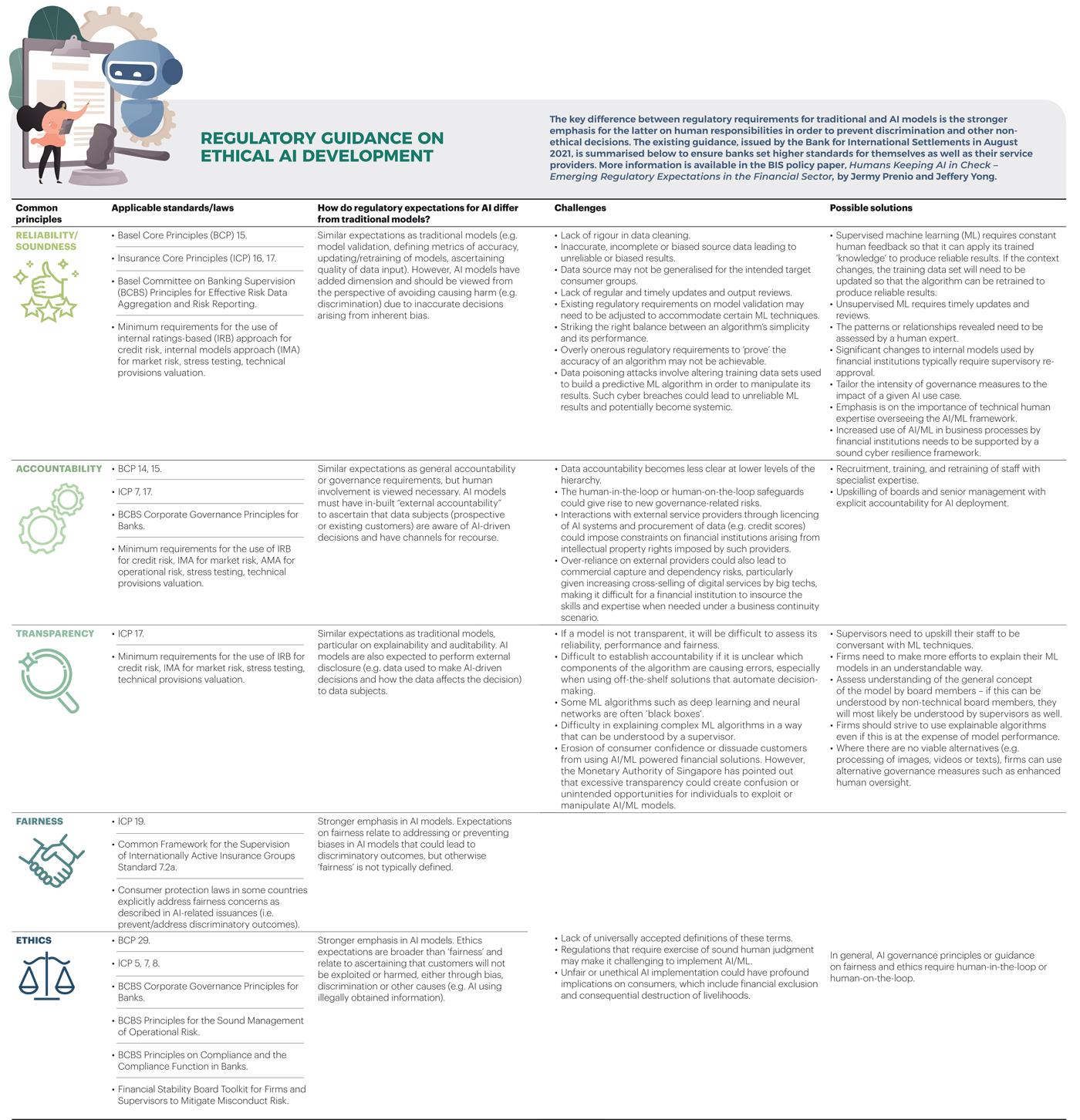

Luxembourg’s financial regulator, the Commission de Surveillance du Secteur Financier, has warned market players about bias in artificial intelligence (AI) systems, including the use of historical data such as this when it has not been debiased before being used to train intelligent systems.

The white paper, Artificial Intelligence: Opportunities, Risks and Recommendations for the Financial Sector, gives this example. Suppose a developer builds an AI-based credit scoring algorithm for a bank using historical data. If the algorithm’s design does not take the history of banking legislation into account, women in Luxembourg today could be disadvantaged by less favourable credit scores than men. Because few loans were granted to women in the past, unless the algorithm is adjusted for the dataset bias that existed before 1972, the automated credit score could disproportionately penalise women through higher interest rates, fewer product options, or even denial of access to financial services.
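The mechanism the commission describes can be illustrated with a deliberately naive sketch. Everything below is hypothetical: the `LoanRecord` type, the functions and the counts are invented for illustration and are not taken from the white paper or from any real bank’s data. A “model” that scores new applicants by their group’s historical approval rate simply carries the old gap forward. The disparate impact ratio (one group’s approval rate divided by another’s) makes the gap visible; the 0.8 threshold used here is a rule of thumb borrowed from US employment guidelines, not something the commission prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoanRecord:
    """One hypothetical historical loan application."""
    sex: str        # "F" or "M" in this toy example
    approved: bool


def approval_rates(records: list[LoanRecord]) -> dict[str, float]:
    """Share of applications approved, per group."""
    totals: dict[str, int] = {}
    approvals: dict[str, int] = {}
    for r in records:
        totals[r.sex] = totals.get(r.sex, 0) + 1
        approvals[r.sex] = approvals.get(r.sex, 0) + int(r.approved)
    return {group: approvals[group] / totals[group] for group in totals}


def naive_score(records: list[LoanRecord], sex: str) -> float:
    """A deliberately naive 'model': it scores a new applicant by the
    historical approval rate of their group, so any past gap is carried
    straight into today's scores."""
    return approval_rates(records)[sex]


def disparate_impact_ratio(rates: dict[str, float], group: str, reference: str) -> float:
    """Approval rate of `group` divided by that of `reference`."""
    return rates[group] / rates[reference]


# Invented counts: 80 historical applications from men (60 approved)
# and only 20 from women (4 approved).
history = (
    [LoanRecord("M", True)] * 60
    + [LoanRecord("M", False)] * 20
    + [LoanRecord("F", True)] * 4
    + [LoanRecord("F", False)] * 16
)

rates = approval_rates(history)
print(rates)                                  # {'M': 0.75, 'F': 0.2}
print(naive_score(history, "F"))              # 0.2
ratio = disparate_impact_ratio(rates, "F", "M")
print(round(ratio, 2), ratio < 0.8)           # 0.27 True
```

A real scoring model would rarely use sex as an explicit input, but other inputs correlated with it can reproduce the same effect, which is why the commission points to debiasing the data rather than only adjusting the model’s inputs.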

The paper also highlights that “complex models may result in a lack of transparency for customers, to which it would be difficult to explain why a credit request was rejected…Also, if high volumes of credit decisions are automated, errors eventually included in the model would be amplified. Similarly, if a few models developed by external providers gain large adoption, design flaws or wrong assumptions contained in the models may have systemic effects.”

The commission also notes that under the EU’s General Data Protection Regulation, customers have the right “not to be subject to a decision based solely on automated processing”.

So although judgments can be automated to speed up simple banking processes such as credit scoring, risks can arise from several sources, and in certain jurisdictions the customer’s consent is also a prerequisite.

Bias is built into our human DNA and bleeds into everything we create, including autonomous products and systems. It cannot be eliminated, but we can mitigate it by being self-aware and observing basic standards of care.

Mitigating Bias in Artificial Intelligence: An Equity Fluent Leadership Playbook, published by the Center for Equity, Gender and Leadership at the Haas School of Business, University of California, Berkeley, identifies several points at which bias can enter the AI value chain:

+ Dataset: Data is assumed to reflect the world accurately, but there are significant gaps, including little or no data from particular communities, and data is rife with racial, economic, and gender biases. A biased dataset can be unrepresentative of society by over- or under-representing certain identities in a particular context. Biased datasets can also be accurate yet representative of an unjust society; in that case, they reflect the real discrimination that particular groups face.

+ Algorithm: Bias can creep in when defining the purpose of an AI model and its constraints, or when selecting the inputs the algorithm should consider to find patterns and draw conclusions from the datasets. Depending on how it is evaluated, an algorithm can also contribute to discriminatory outputs irrespective of the quality of the datasets used.

+ Prediction: Predictions can be inaccurate or biased if an AI system is used in a different context, for a different population, or for a different use case from the one for which it was originally developed or operationalised. AI systems can also be used or altered by organisations or individuals in ways that discriminate against certain populations. This may be because bad actors have got hold of the technology; in other cases, it may be less overt and open to debate over fairness. For AI systems that support human decision-making, how individuals interpret the machine’s outputs can be shaped by their own lived experience.
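Of these entry points, over- or under-representation in a dataset is the easiest to check mechanically. The Python below is a minimal sketch, assuming each group’s share of the relevant population is known: it compares that share with the group’s share of the training data. The function names, the 5% tolerance and the figures are all hypothetical.

```python
def representation_gaps(sample_counts: dict[str, int],
                        population_shares: dict[str, float]) -> dict[str, float]:
    """Difference between each group's share of the dataset and its
    share of the reference population (negative = under-represented)."""
    total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


def under_represented(gaps: dict[str, float], tolerance: float = 0.05) -> list[str]:
    """Groups whose share falls short of the population share by more
    than `tolerance` (an arbitrary threshold chosen for this example)."""
    return [group for group, gap in gaps.items() if gap < -tolerance]


# Hypothetical figures: a dataset that is 20% women, compared against
# an assumed 50/50 population split.
gaps = representation_gaps({"F": 200, "M": 800}, {"F": 0.5, "M": 0.5})
print({g: round(v, 2) for g, v in gaps.items()})   # {'F': -0.3, 'M': 0.3}
print(under_represented(gaps))                     # ['F']
```

Passing a check like this is not enough on its own: as the playbook notes, a dataset can be representative yet still encode the discrimination a group actually faced, as in the Luxembourg example.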

One of the more insightful recent interviews on debiasing AI took place at EmTech Digital 2022, MIT Technology Review’s annual conference on AI, with Dr Nicol Turner Lee, Director of the Center for Technology Innovation at the Brookings Institution, whose work has been steadily gaining ground. A vocal advocate for more enlightened AI, she aims to squeeze inequality out of the equation by reminding scientists and practitioners to be honest.

“I tell everybody you need a social scientist as a friend. I don’t care who you are – a scientist, an engineer, a data scientist. If you don’t have one social scientist as your friend, you’re not being honest to (sic) this problem, because what happens with that data? It comes with all of that noise and despite our ability as scientists to tease out or diffuse the noise, you still have the basis and the foundation for the inequality.

“So, one of the things I’ve tried to tell people [is that] it’s probably okay for us to recognise the trauma of the data that we’re using. It’s okay for us to realise that our models will be normative in the extent to which there will be bias, but we should disclose what those things are.

“That’s where my work in particular has become really interesting to me…What part of the model is much more injurious to respondents and to outcomes? What part should we disclose that we just don’t have the right data to predict accurately without some type of risk to that population?”

The discussion inevitably turned to regulating the risks of AI. This question, posed by an audience member, reflects the frustration of developers and innovators who battle with less tech-savvy bureaucrats: “How do we engage and help legislators understand the real risks and not the hype that is sometimes heard or perceived in the media?”

Dr Turner Lee’s response: “I think you’re right that policymakers should actually define the guardrails, but I don’t think they need to do it for everything.”

“I think we need to pick those areas that are most sensitive, what the EU has called ‘high risk’. Maybe we might take from that some models that help us think about what’s high risk, where should we spend more time, and potentially with policymakers, where should we spend time together?

“I’m a huge fan of regulatory sandboxes when it comes to co-design and co-evolution of feedback…but I also think, on the flip side, that all of you have to take account for your reputational risk.”

Debates about how AI should be supervised closely mirror those about regulating fintech. Commenting on the fast-and-loose minting and volatile trading of cryptocurrency, Eswar Prasad argued in his recent FT article, Crypto Poses Serious Challenges for Regulators, that “when an industry clamours for regulation, it typically craves the legitimacy that comes with it, while trying to minimise oversight. That is the biggest risk regulators must guard against — giving the crypto industry an official imprimatur while subjecting it to light-touch regulation.”

At the 2018 International Monetary Fund (IMF)/World Bank meetings in Bali that launched the Bali Fintech Agenda, Indonesian President Joko Widodo favoured a “light-touch safe harbour” approach so that fintech firms and entrepreneurs would not be curbed in their innovation, whilst the then IMF Managing Director Christine Lagarde was sceptical.

“I am a little bit concerned when I hear…talk about the ‘light touch’,” she said, “because it reminds me of the soft-touch regulation we had just before the crisis in 2008.”

“We point very directly to the risks of, what I would call as a former lawyer, ‘forum shopping’. In other words, if there is no international cooperation, if there is no adherence to the Bali [Fintech] Agenda key elements, then there is a risk that somewhere, somehow, the regulatory environment will be conducive to an abuse of the system. It will be the leak through which unwanted, unnecessary business is being conducted.”

Lagarde and others, such as then Bank of England Governor Mark Carney, argued that regulation, especially in high-yield and innovative areas of fintech, should be proportionate to the risks involved. Proportionality in banking regulation promotes stability whilst keeping the regulatory burden and compliance costs to a minimum. It is locked in a constant tug-of-war with ‘light-touch’ reforms that fail to shield citizens from financial instability and bank bailouts.

If banking has emerged from the Covid-19 pandemic relatively unscathed, it is because of decades-long reforms under the Basel framework, which are anchored in the concept of proportionality. Perhaps what could be made clearer to developers and innovators is that regulators are not expected to be subject matter experts; they exist to maintain the soundness and safety of the financial system on behalf of their citizens.

Supervisory authorities are concerned with specific challenges, such as defining the tiering criteria for applicable laws, achieving a level playing field, and minimising regulatory arbitrage. The truth is that it is incumbent upon developers to do their due diligence and not wait for a prescriptive rules-based regime to decide what’s right and what’s wrong.

After all, isn’t that what autonomy is about?

Julia Chong is a content analyst and writer at Akasaa, a boutique content development and consulting firm.